Basic Tips for Successful Prompting

Here are some pointers to get the most out of Survivalist.ai’s offline AI app, which you can download here.

Be clear and specific. Avoid jargon, slang and ambiguous terms when possible. This helps the AI understand your request and generate relevant responses.

If you decide to change topics, it's best to start a new conversation by touching the + button in the navigation bar. The AI uses your prior conversation as context when crafting its response, so leftover text from an unrelated topic can steer it off course.

Break down complex tasks into more manageable sub-tasks or sub-questions to help the AI generate more focused responses.

Provide brief guidance on the type of response you are looking for to steer the AI in the right direction.

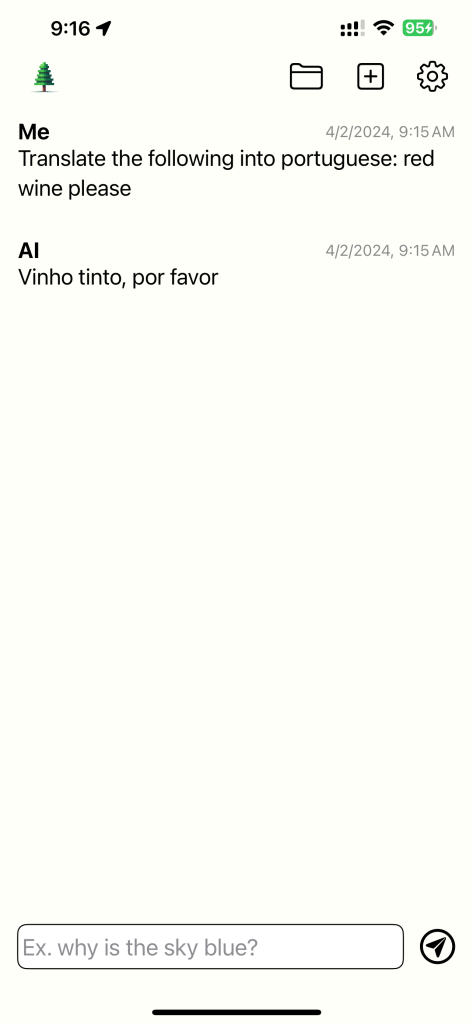

For example, saying “Translate the following text into Portuguese: red wine please” gives the AI a clear instruction for what to do with the text after the colon.

If you keep getting poor responses, also consider:

- Rephrasing your query using different terms

- Trying a larger model. Touch the gear icon in the navigation bar, then choose a different model from the dropdown menu.

Using a Larger LLM

Very large LLMs have exhibited sophisticated abilities that seem to emerge suddenly as they scale up. Because we are working with limited processing power and storage, our models don't approach these levels, but in general, the larger a model is and the more data it is trained on, the better its results should be.

However, this is not always the case, so it’s good practice to try different AI models for different use cases.

Some models are trained specifically for Q&A, some to follow instructions well, and others to identify images or to hold back-and-forth conversations.

The models we have chosen are multipurpose.

Increase the Context Window

Increasing the context window gives the AI more of your prior conversation to consider; however, it comes at the cost of speed and memory. On the small devices we are working with, the slowdown can be more pronounced.

More technical explanation: the context window in Survivalist.ai can be set from 256 to 1024 tokens and is limited by the device's processing speed and memory. A token is a snippet of text from the fixed vocabulary the model was trained with. It can be a whole word, but is often part of a word. The context window holds the tokens in your query as well as the AI's prior response, and that is the entirety of what the LLM has to consider when generating its response. That's why breaking your query into sub-queries can sometimes lead to better results: each smaller exchange fits more easily within the window.
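To make the idea concrete, here is a minimal Python sketch of how a fixed token budget limits what the model sees. It assumes a simple strategy of keeping the most recent messages and dropping the oldest ones; Survivalist.ai's actual handling of the context window is not documented here and may differ. The `count_tokens` and `fit_to_context` helpers are hypothetical, and `count_tokens` simply counts words, whereas real models use subword tokenizers that usually produce more tokens than words.

```python
def count_tokens(text: str) -> int:
    """Hypothetical stand-in tokenizer: counts whitespace-separated words.

    Real LLMs use subword tokenizers, so actual token counts are usually higher.
    """
    return len(text.split())


def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined token count fits in max_tokens.

    Older messages that do not fit are dropped, so the model never sees them.
    """
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest message toward the oldest.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # Everything older than this is outside the window.
        kept.append(message)
        used += cost
    # Restore chronological order before handing the text to the model.
    kept.reverse()
    return kept


if __name__ == "__main__":
    conversation = [
        "How do I purify water in the wild?",
        "Boil it for at least one minute, or use purification tablets.",
        "What about at high altitude?",
    ]
    # With a 16-token budget, the oldest message no longer fits.
    print(fit_to_context(conversation, max_tokens=16))
```

With a 16-token budget, the opening question is dropped and only the last two messages remain, which is the "short-term memory" effect in action.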

Think of the AI as a smart person with a very short-term memory.

Increase/Decrease the Temperature

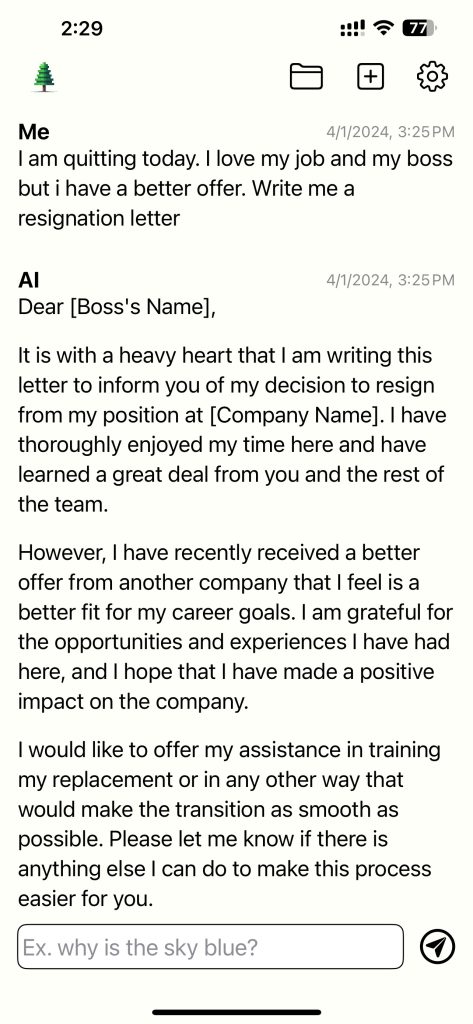

Increasing the temperature increases the randomness of the generated response. If you would like more creativity in the AI's responses, raise this setting. A high temperature may be better suited to writing fiction, generating innovative ideas, or composing letters, poems and lyrics.

Lower the temperature for more deterministic results.

More technical explanation: in the final steps of generating an output, the AI creates a probability distribution over the possible next tokens, based on the context it has been given, and selects from that distribution. Increasing the temperature creates a flatter distribution, and decreasing it creates a steeper one. Put another way, increasing the temperature makes lower-probability outputs more likely and higher-probability outputs less likely.
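The flattening and steepening described above can be sketched in a few lines of Python. This is a minimal illustration, assuming the common approach of dividing the model's raw scores (logits) by the temperature T before a softmax, so each probability is p_i = exp(z_i / T) / sum_j exp(z_j / T). Survivalist.ai's exact sampling code is not described here and may include other steps. The candidate tokens and their scores are made-up values, and the function names are hypothetical.

```python
import math
import random


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw model scores (logits) into probabilities, scaled by temperature.

    A temperature above 1 flattens the distribution; below 1 sharpens it.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    # Subtract the max before exponentiating to avoid overflow.
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(tokens: list[str], logits: list[float],
                      temperature: float, rng: random.Random) -> str:
    """Pick one token at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


if __name__ == "__main__":
    # Hypothetical candidate next tokens with made-up scores.
    tokens = ["fire", "shelter", "water", "dragon"]
    logits = [3.0, 2.0, 1.5, -1.0]

    for t in (0.5, 1.0, 2.0):
        probs = softmax_with_temperature(logits, t)
        formatted = ", ".join(f"{tok}={p:.2f}" for tok, p in zip(tokens, probs))
        print(f"T={t}: {formatted}")

    rng = random.Random(0)
    print(sample_next_token(tokens, logits, temperature=1.0, rng=rng))
```

At T=0.5, "fire" takes most of the probability; at T=2.0 the probabilities move closer together, and even the unlikely "dragon" has a noticeable chance of being picked.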